With help from Derek Robertson and Mark Scott

A person types on an illuminated computer keyboard. Sean Gallup/AP Photo

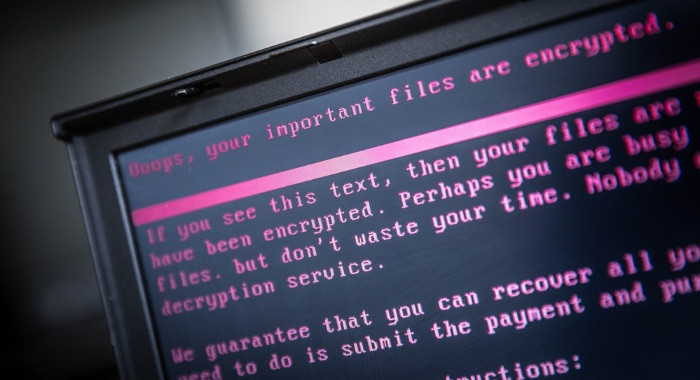

Soon, the only thing that can stop an AI-powered cyberattack might be an AI-powered cyber-defense.

Some analysts believe that, in the not-too-distant future, cybersecurity will largely consist of a “cat-and-mouse game” between autonomous AI hackers and the autonomous systems set up to thwart them, co-evolving in opposition to each other with little human involvement.

Yesterday, President Joe Biden took a big, if initial, step towards that future with a section of his sweeping executive order that instructs the secretaries of defense and homeland security to set up a program that uses AI to more efficiently scan government computer systems for security vulnerabilities.

The directive is meant to build on an existing project of the Pentagon’s Defense Advanced Research Projects Agency, the AI Cyber Challenge, launched in August to find bugs in open-source code with the help of large language models.

Of course, an AI that finds vulnerabilities can be used to go on the cyberoffense as well.

Gregory Falco, a professor at Cornell whose work has been funded by DARPA, NASA and the U.S. Space Force, tells DFD that the AI-on-AI future of cybersecurity has already dawned, and that he’s in the middle of it.

DFD caught up with Falco to discuss the disconnect between D.C. and developers, China’s AI-powered hacking, and the tools he’s developing to let an AI “hack back” in outer space.

What do you make of yesterday’s executive order?

The security aspects of the executive order are meant to be left pseudo-ambiguous. The cybersecurity policy we have today is very abstract. It’s not directed at very specific technical specifications.

Why is that?

My understanding generally of policymaking and cybersecurity is that all language is always left ambiguous because the folks who are making the language don’t have the answer.

For example, right now we are working to develop new standards for space system cybersecurity. I’m the chair of the IEEE [Institute of Electrical and Electronics Engineers] standard for space system cybersecurity. It’s an international technical standard. And we’re trying to do this because we know that there’s an expectation that we’re going to revitalize cybersecurity guidance in the national policy for space systems, which are often autonomous or highly automated systems.

And the reality is that, whenever we have policy guidance come out, it’s super nonspecific when it comes to cyber. And then it’s kind of left up to the technical community to figure it out. And that kind of causes a bit of a mess.

How do you make the process work better?

There’s a huge disconnect between Washington, D.C., and most of the developers in the country, who’re not based in Washington, D.C. And they oftentimes just don’t reach that audience, the development community. They’re reaching the policy audience, sometimes parts of the East Coast, but usually just their little bubble of the Beltway.

When it comes to using AI for hacking, what does that actually look like?

A great example of that in very recent history has been with the advent of ChatGPT, where we have the hacker community trying to develop exploits using ChatGPT by providing a very basic code base to play off, saying, “Find vulnerabilities based off of this specification, and then use these exploits that are provided in these libraries to go do this bad thing.”

In the past, you had to develop very fast system models of a given environment, and then come up with bespoke planning algorithms to be able to break into these systems in an automated way. With the advent of AI, we’ve been able to break into systems so much more easily. And this is a big concern, obviously.

You’ve worked with NASA. Are you using AI for cybersecurity in outer space?

Yes, we are.

There’s a program that we ran up until a year or so ago. I called it space Iron Dome. And what this was about was developing reinforcement learning, a machine learning technique that learns from its mistakes to improve, to be able to attack a system back if someone attacks.

So if there is a cyberattack against a space vehicle, it’s a means of conducting what we call a “hack back” in space, preventing someone from hacking into that space system. But this is an entirely human-out-of-the-loop system.

That sounds tricky.

It’s using this very probabilistic technique. If we get that wrong, you can imagine. So you need a lot of assurance built into these reinforcement learning models for space systems.

One thing that came to mind when I read this executive order is that it seems like a step towards a cybersecurity world in which defensive and offensive AIs are co-evolving against each other with humans largely out of the loop. Is that a future you see?

That’s not a future. It’s already happening.

It’s happening on a pretty basic level today, where you have these bots that are doing bad things, and then you have AI systems that are trying to combat these blunt force bots that are messing with our digital infrastructure.

But the techniques are evolving both from the adversary’s and from the defender’s standpoint.

One of the fundamental parts of my dissertation, which was a number of years ago at MIT, was on developing these automated attack mechanisms for critical infrastructure systems. And this was in order to figure out, as a defender, how do I anticipate what an attacker is going to do to my infrastructure. Then we applied this to a space system application at NASA’s Jet Propulsion Laboratory, because that was such a critical and expensive asset that we were working on. That was back in 2016. Things have evolved considerably.

These AI systems are trying different techniques against other systems. And if they fail, then they change their techniques. It’s become an incredibly effective way to evolve machine learning models.
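The trial-and-error loop Falco describes can be illustrated with a toy sketch. This is not his system or any real security tool. It is a minimal epsilon-greedy learner, a standard textbook baseline in reinforcement learning, and the function name, the abstract "techniques" and their success rates are all assumptions invented for illustration.

```python
import random


def run_trial_and_error(success_rates, rounds=2000, epsilon=0.1, seed=0):
    """Toy epsilon-greedy learner (hypothetical illustration only).

    success_rates: hidden chance that each abstract "technique" works.
    Returns how often each technique was tried and the learner's
    running estimate of how well each one works.
    """
    rng = random.Random(seed)
    n = len(success_rates)
    attempts = [0] * n
    estimates = [0.0] * n

    for _ in range(rounds):
        if rng.random() < epsilon:
            # Explore: occasionally try a technique at random.
            choice = rng.randrange(n)
        else:
            # Exploit: otherwise use the technique that has worked best so far.
            choice = max(range(n), key=lambda i: estimates[i])

        # Simulated outcome; a real system would observe this, not sample it.
        succeeded = rng.random() < success_rates[choice]

        # Update the running average success rate for the chosen technique.
        attempts[choice] += 1
        estimates[choice] += (float(succeeded) - estimates[choice]) / attempts[choice]

    return attempts, estimates
```

Calling `run_trial_and_error([0.2, 0.5, 0.8])` returns the attempt counts and the learned estimates. Over enough rounds the learner tends to drift away from techniques that keep failing and toward the ones that succeed, which is the "if they fail, then they change their techniques" behavior in miniature.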

Does that creep you out at all?

No, because this is the natural evolution of how technology is evolving right now.

Instead of worrying, we should just take advantage of these tools, and figure out how to use them to our benefit. And kind of continue to play that cat-and-mouse game, but on the defensive side.

Is this “cat-and-mouse” game playing out in the lab or in the real world?

Most of these techniques are being played out in a lab environment right now. The ones that I can talk about. There’s obviously a whole bunch of interest in figuring out how to test this in the real world.

Is there not a concern that this could lead to something equivalent to gain-of-function research and a lab leak in the biological context, that these techniques create the most powerful hacking tool ever, and it gets into the wrong hands?

I think that’s still a bit sci-fi. But you can’t really discount sci-fi, because sci-fi is usually the inspiration for a lot of research and development.

What exactly are U.S. adversaries doing?

Some of the most important and interesting AI work in the world right now is coming out of China.

I’m trying to make sure I’m not disclosing anything I need to not disclose. But there’s definitely reports of China using these AI systems in offensive capability for offensive operations, and these are in partnership with other adversaries.

AI and cyber is the great leveler, right? It evens the playing field for all of these nation-states. It’s an asymmetric system, where anyone is able to use these capabilities, once the models are made. It really comes down to data, and whoever has the most data to train the models, whoever has the most educated workforce to spend the time to build these systems. So we’re in a national security race right now against our adversaries.

Whither open-source

Amid the litany of artificial intelligence announcements this week — including the United Kingdom’s two-day AI Safety Summit starting on Wednesday — advocates of so-called open source models aren’t feeling the love. In an open letter set to be published Wednesday — which was shared exclusively with Digital Future Daily — leading voices from that community (which advocates for tech that can be used by all) warn politicians are too focused on the latest, most popular walled-off AI systems.

“We are at a critical juncture in AI governance,” according to the signatories, which include Meta’s chief AI scientist, Yann LeCun; Audrey Tang, Taiwan’s minister of digital affairs; and Mark Surman, executive director of the Mozilla Foundation, the open-source tech company that quarterbacked the letter. Nobel Peace Prize winner Maria Ressa and former French digital minister Cedric O also signed the letter.

“Yes, openly available models come with risks and vulnerabilities,” the letter acknowledges. “However, we have seen time and time again that the same holds true for proprietary technologies — and that increasing public access and scrutiny makes technology safer, not more dangerous.”

It’s not surprising that leaders of open source AI want to shoehorn themselves into the discussion. Currently, a lot of the oxygen has been taken up by the big names like Microsoft, Alphabet and OpenAI. It’s also pretty self-serving for those behind the letter to call for greater support of open-sourced artificial intelligence because it serves their own (often, commercial) purposes.

But as the White House’s recent executive order on AI highlighted, promoting greater competition around this technology will be key. And that, inevitably, requires smaller players — often with fewer resources — to be able to join forces via open source large language models and other forms of AI to take on the big players.

“We need to invest in a spectrum of approaches — from open source to open science — that can serve as the bedrock for… lowering the barriers to entry for new players focused on creating responsible AI,” the letter continued.

This isn’t just about those with vested interest promoting one form of AI over another. In a recent Stanford University study that looked at how transparent the leading generative AI models are, the researchers discovered that three of the top four systems that performed the best were those with an open-sourced background.

Granted, none of the systems tested in the Stanford study performed great. But the likes of Meta, BigScience and Stability AI, which favor open models, offered greater transparency on how their systems worked versus companies that preferred to keep everything walled off. That includes publishing the types of data used to power these systems; how the AI models were used in the wild; and disclosures on everything from the types of labor used to build the systems to how potential risks were mitigated.

“Open source models are roughly as good as closed models,” said Kevin Klyman, a tech policy researcher at Harvard who co-authored the Stanford University study, in reference to the level of sophistication offered by open source AI systems versus their closed-off competitors. But on transparency: “open developers still outperform closed developers.” — Mark Scott

A wish list for the U.K. AI summit

A handful of members of Congress have a message for Vice President Kamala Harris to carry with her as she jets off to the United Kingdom for its upcoming AI summit.

In a letter exclusively shared with Digital Future Daily, Rep. Sara Jacobs (D-Calif.) and Sen. Ed Markey (D-Mass.) along with a group of co-signers are giving Harris extra momentum to promote the “fundamental rights and democratic values” they say are well-represented in the Biden White House’s Blueprint For an AI Bill of Rights.

“As you participate in the Summit, we ask that you continue to uplift the principles of fairness, accountability and safety, while promoting an inclusive definition of AI safety,” they write.

“…We hope you will emphasize the impact of algorithmic decision-making on access to opportunities and critical needs, including housing, credit, employment, education, and criminal justice,” they continue, urging the White House and global leaders to include “marginalized communities” not usually at the table for high-level tech policy discussions. — Derek Robertson

THE FUTURE IN 5 LINKS

The New York Times’ Kevin Roose analyzes the Biden AI executive order.

Lasers have become a focal point (sorry) of the U.S.-China trade war.

AMD’s new AI chip is hoping to make a big splash on the stock market.

An AI startup raised $200 million for autonomous flying military tech.

Generative AI is playing a surprisingly subtle role in the Israel-Hamas conflict.

Stay in touch with the whole team: Ben Schreckinger, Derek Robertson, Mohar Chatterjee, Steve Heuser, Nate Robson and Daniella Cheslow.

If you’ve had this newsletter forwarded to you, you can sign up and read our mission statement at the links provided.